This is the final part of our Spectre retrospective. In this post, I’ll talk about the technology behind a computational long exposure, a few UI innovations, and our plans to share code between apps.

The Computational Long Exposure

We started building Spectre, among other things, to get around hardware limitations. Most iPhones can expose an image for a maximum of 1/3 of a second, and only the latest iPhones (XS and XR) support a maximum exposure time of one second. That’s a far cry from the several seconds you need for an interesting long exposure.

Why does Apple cap the hardware at one second? Apple has never publicly commented on this, but the longer a sensor is active, the more heat it generates. A large DSLR has plenty of space to dissipate heat, while the compact, airtight body of an iPhone is another story. Heat increases sensor noise and, more importantly, can damage components in your iPhone.

It’s true that other smartphones support exposures of several seconds. They use a different set of components in a different configuration that dissipates heat in a different way. (It’s also possible those phone makers are A-OK with the stress on the components.)

Every design is about tradeoffs. Apple puts long exposures way at the bottom of its priorities, far below battery life, processor speed, water resistance, and so on. After all, only a tiny, tiny fraction of users would ever use them, since they’re useless without a tripod: a long exposure requires the phone to be perfectly stabilized to come out sharp, and most people shoot handheld.

That brings us back to Spectre: As we were developing the app, we realized an absolute killer feature would be handheld stabilization. By enabling a user to shoot without a tripod, we could bring long exposures to a much larger audience. In fact, we never even use native one-second exposures.

Shorter Exposures are Better. What?

In theory, you could create a nine-second long exposure by simply shooting nine one-second exposures and merging them together. However, each of those handheld one-second exposures would be blurry.

There’s a photography rule of thumb that you should shoot handheld photos at a shutter speed no slower than the reciprocal of your lens’s focal length. In plainer terms: with a 50mm lens, shoot at 1/50s; with a 100mm lens, at 1/100s; and so on. The iPhone X has a 28mm (35mm-equivalent) focal length, so we shoot at 1/30s, the closest supported frame rate. For a nine-second exposure, that works out to 270 frames.
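Here is that arithmetic as a small Swift sketch. It is an illustration, not Spectre’s code: the list of supported frame rates (24, 30, and 60 fps) is an assumption made for the example, and the helper names `handheldShutter` and `closestFrameRate` are hypothetical.

```swift
import Foundation

// Hypothetical helper: rule-of-thumb handheld shutter speed in seconds,
// 1 / (35mm-equivalent focal length).
func handheldShutter(focalLength mm: Double) -> Double {
    1.0 / mm
}

// Hypothetical helper: picks the frame rate whose frame duration (1 / fps)
// is closest to the target shutter speed.
func closestFrameRate(toShutter shutter: Double, supported: [Double]) -> Double {
    supported.min { abs(1.0 / $0 - shutter) < abs(1.0 / $1 - shutter) }!
}

let shutter = handheldShutter(focalLength: 28)   // about 1/28 s
// Assumed set of supported frame rates, for illustration only.
let fps = closestFrameRate(toShutter: shutter, supported: [24, 30, 60])
let frames = Int((9.0 * fps).rounded())          // nine-second exposure
print(fps, frames)
```

At 28mm the closest choice is 30 fps, so a nine-second exposure needs 270 frames, each lasting 1/30 s.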

It isn’t as simple as calling camera.capture() 270 times. The iOS camera system does a lot of complex work every time you call it, such as synchronizing the flash and optical image stabilization to fire at exactly the moment the photo is taken. Every capture also gets post-processing, such as noise reduction (whether you want it or not). All of this adds latency and creates gaps in the long exposure. So instead of using the photo capture APIs, we use the video API and capture 4K video frames.

Of course, that’s easier said than done. Each frame takes about 50 megabytes of memory. With subsampling we can get that down to 25 MB, but 270 frames still weigh in at over six gigabytes.
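Worked through with the figures above (decimal units, 1 GB = 1,000 MB):

$$
9\ \text{s} \times 30\ \tfrac{\text{frames}}{\text{s}} = 270\ \text{frames}, \qquad 270 \times 25\ \text{MB} = 6{,}750\ \text{MB} \approx 6.75\ \text{GB}
$$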

Even on the most powerful iPhone, an app gets less than two gigabytes of memory to work with. Even if we tried swapping to disk, there’s no way it would work in real time.

So we build the long exposure on the fly. As each video frame arrives, we merge it into our master frame in under 33 milliseconds (one frame’s time at 30 fps), and then release that frame back to the system. This keeps us below a few hundred megabytes of memory.
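The memory trick is that only one accumulated frame ever stays alive. The Swift sketch below shows the idea on the CPU with plain arrays. Spectre does its merge on the GPU with Metal, and the post doesn’t say how frames are combined. Here we assume a simple running average of pixel values, and `RunningMeanAccumulator` is a hypothetical name.

```swift
import Foundation

// Hypothetical CPU stand-in for the GPU merge. It keeps a single running-mean
// "master frame" and folds each incoming frame into it, so memory stays at
// one frame's worth no matter how many frames arrive.
struct RunningMeanAccumulator {
    private(set) var master: [Float]
    private(set) var frameCount = 0

    init(pixelCount: Int) {
        master = [Float](repeating: 0, count: pixelCount)
    }

    // Merges one frame; the caller can release `frame` right afterwards.
    mutating func merge(_ frame: [Float]) {
        precondition(frame.count == master.count, "frame size mismatch")
        frameCount += 1
        let weight = 1 / Float(frameCount)
        for i in master.indices {
            // Incremental mean: m_n = m_(n-1) + (x_n - m_(n-1)) / n
            let m = master[i]
            master[i] = m + (frame[i] - m) * weight
        }
    }
}

// Three tiny two-pixel "frames" arriving one at a time.
let incoming: [[Float]] = [[0.2, 1.0], [0.4, 0.0], [0.6, 0.5]]
var accumulator = RunningMeanAccumulator(pixelCount: incoming[0].count)
for frame in incoming {
    accumulator.merge(frame)
}
print(accumulator.master)
```

The incremental form avoids storing a growing sum, and it never needs more than the master frame plus the frame currently being merged. A scalar CPU loop like this would likely struggle to process full 4K frames within the 33 ms budget, which is why the real merge has to run on the GPU.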

This would be impossible without our Metal-based video processing engine, which started in Halide. That brings us to our next effort:

Code Reuse

The worst possible outcome of supporting two apps in parallel is doubling our workload, and we don’t want to slow down our work on Halide. Fortunately, where it makes sense, a significant portion of Halide is reusable.

Take our photo reviewer.

It seems simple, but there’s a surprising amount of machinery. It fetches assets from iCloud, maintains a local cache for snappy responsiveness, throttles requests when we’re under memory pressure, and more. That’s before we even get to its UI! Building our photo reviewer from scratch would take months.
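To make the caching part concrete, here is a minimal Swift sketch of a least-recently-used preview cache that shrinks itself when memory gets tight. It is not Halide’s implementation. `PreviewCache` and `handleMemoryPressure()` are hypothetical names, halving the budget is an arbitrary policy chosen for illustration, and the sketch models neither iCloud fetching nor request throttling.

```swift
import Foundation

// Hypothetical LRU cache for decoded previews, keyed by asset identifier.
final class PreviewCache<Value> {
    private var storage: [String: Value] = [:]
    private var recency: [String] = []   // least recently used first
    private(set) var capacity: Int

    init(capacity: Int) { self.capacity = capacity }

    var count: Int { storage.count }

    func value(for key: String) -> Value? {
        guard let value = storage[key] else { return nil }
        touch(key)
        return value
    }

    func insert(_ value: Value, for key: String) {
        storage[key] = value
        touch(key)
        evictIfNeeded()
    }

    // Hypothetical hook for a memory warning: halve the budget, then evict down to it.
    func handleMemoryPressure() {
        capacity = max(1, capacity / 2)
        evictIfNeeded()
    }

    // Marks `key` as the most recently used entry.
    private func touch(_ key: String) {
        recency.removeAll(where: { $0 == key })
        recency.append(key)
    }

    // Drops least recently used entries until we're within capacity.
    private func evictIfNeeded() {
        while storage.count > capacity, let oldest = recency.first {
            recency.removeFirst()
            storage[oldest] = nil
        }
    }
}

let cache = PreviewCache<Int>(capacity: 4)
for (index, id) in ["a", "b", "c", "d"].enumerated() {
    cache.insert(index, for: id)
}
_ = cache.value(for: "a")       // "a" becomes the most recently used entry
cache.handleMemoryPressure()    // budget drops to 2: evicts "b", then "c"
```

On iOS, the pressure hook would typically be driven by the system’s memory-warning notification. A production cache would also weigh entries by byte size rather than count.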

For Spectre, we converted our photo reviewer into a reusable component. Of the 20,000 lines of code that make up the app, about 7,000 are the photo reviewer. During the modularization, we cleaned up the code, fixed a handful of bugs, and found a few ways to speed things up. These improvements will make their way back to Halide in a future update.

At the same time, we’re going to be conservative with code reuse. In a perfect world, we could just display our photo reviewer with:

```swift
let reviewer = PhotoReviewerViewController()
self.present(reviewer, animated: true)
```

But there are per-app behaviors that complicate things. A classic tradeoff with “write-once, run everywhere” is ending up with a system that feels out of place everywhere. For example, Halide uses yellow as its accent color, while Spectre uses green.

The easy way out is to choose an app-neutral color. It’s a perfect metaphor for cost-cutting code reuse: just make everything bland. The better solution is to build a theming API for our photo reviewer, so each app can customize the accent color.

```swift
import UIKit

// Each app supplies its own theme to the shared photo reviewer.
protocol PhotoReviewerTheme {
    var primaryColor: UIColor { get }
}

struct HalideTheme: PhotoReviewerTheme {
    let primaryColor: UIColor = .yellow
}

let reviewer = PhotoReviewerViewController(theme: HalideTheme())
```

This is a simple change, but there are deeper deviations at the product level. For example, in Halide you want to see details such as the available file formats in the upper right.

That adds nothing to Spectre and just clutters the interface. So we have an API for specifying which panels to display in the reviewer.

```swift
// Each app opts in to the panels and branding it needs.
let configuration = PhotoReviewerViewController.Configuration(
    theme: HalideTheme(),
    showAllResources: true,
    showMetadata: true,
    shareVideo: false,
    logoView: HalideLogoView())

let reviewer = PhotoReviewerViewController(configuration)
```

Our simple code from earlier now sprawls with configuration options, and the same is true of the internals. Every option requires another code path, and over time a simple library can become a bloated framework that takes more energy to maintain than just writing the same code twice. We aren’t near that point, but it’s a tradeoff to keep an eye on.

Another tradeoff is the inherent risk of any dependency. When the photo reviewer was just part of Halide, we could make drastic changes and only have to verify it didn’t break one app. Now there’s a chance a change will appear just fine in Halide but subtly break in Spectre, or vice versa. (We can reduce these surprises with the help of automated tests, but they’re no panacea.)

Evolving our Custom Controls

Sebastiaan and I both think an iPhone app should feel native to the platform, and we do our best to adhere to Apple’s Human Interface Guidelines. As Sebastiaan pointed out in his design retrospective, very often the “boring” solution is best.

Within those guidelines, there’s plenty of room for innovation. For Halide’s iPhone X redesign, we had to find a way to “embrace the notch” without drawing attention to it. What if we put controls up there?

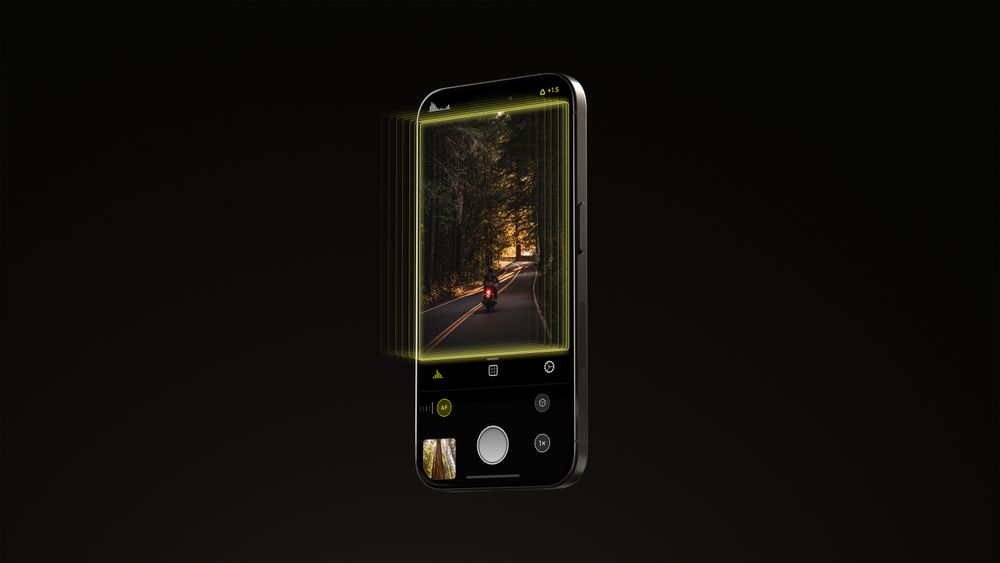

In Spectre, we found an opportunity to innovate in our exposure duration dial.

Keeping true to iOS paradigms, we wanted the dial to have momentum and rubber-banding, similar to a scroll view. However, there are no native controls that support rotational momentum, let alone ones with spring physics. So we rolled our own.

Our dial is a subclass of UIControl that does its own pan gesture interpretation. When you flick it, we calculate the torque based on your flick angle and apply it to our own tiny physics engine.
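One way to turn a linear flick into spin is to keep only its tangential part. The Swift sketch below converts a pan velocity into an angular velocity about the dial’s center, ω = (r × v) / |r|². It treats the flick as a single impulse rather than computing torque, so it is a simplification and not necessarily what Spectre does. `Vec2` and `angularVelocity` are hypothetical names. In UIKit, the velocity would come from `UIPanGestureRecognizer`’s `velocity(in:)`.

```swift
import Foundation

// Hypothetical minimal 2D vector, so the sketch doesn't depend on UIKit.
struct Vec2 {
    var x: Double
    var y: Double
}

// Converts a flick velocity (points/s) at `touch` into an angular velocity
// (radians/s) about `center`. Only the tangential component turns the dial;
// a purely radial flick yields zero. With UIKit's y-down coordinates,
// a positive result means clockwise on screen.
func angularVelocity(touch: Vec2, velocity: Vec2, center: Vec2) -> Double {
    let r = Vec2(x: touch.x - center.x, y: touch.y - center.y)
    let radiusSquared = r.x * r.x + r.y * r.y
    guard radiusSquared > 0 else { return 0 }           // touch exactly at the center
    let cross = r.x * velocity.y - r.y * velocity.x     // z-component of r × v
    return cross / radiusSquared
}

// A flick straight down, 100 pt to the right of the center, at 200 pt/s.
let spin = angularVelocity(touch: Vec2(x: 100, y: 0),
                           velocity: Vec2(x: 0, y: 200),
                           center: Vec2(x: 0, y: 0))   // 2.0 rad/s
```

Dividing by |r|² means the same finger speed spins the dial faster near its center and slower near its rim, which matches how a physical knob feels.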

Saying “physics” might be generous. The first version of our engine was physically accurate, but even after experimenting with different masses, friction, and spring tensions, we found it very hard to tune to our liking. It’s a common challenge in game programming, with artists fiddling with tiny knobs for hours and never being satisfied.

That led us to numeric springing, which is in turn based on the soft constraint work of Erin Catto, creator of the Box2D physics engine.

Numeric springing lets us tune the important effects like velocity and bounciness, and the engine figures out the values to get our desired effect, through the magic of differential equations.
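A widely used formulation of numeric springing integrates a damped spring with implicit Euler and exposes the two knobs directly: the damping ratio ζ (bounciness) and the angular frequency ω (speed). We assume that formulation here because the post doesn’t show Spectre’s code. `springStep` is a hypothetical name. Implicit Euler stays stable for any time step, which is one reason it suits UI animation.

```swift
import Foundation

// One implicit-Euler step of a damped spring pulling `x` toward `target`.
//   zeta:  damping ratio (1 = no bounce, below 1 = bouncier)
//   omega: angular frequency in rad/s (higher = snappier)
// Solves x' = v, v' = -2ζω·v - ω²·(x - target) at the end of the step.
func springStep(x: inout Double, v: inout Double, target: Double,
                zeta: Double, omega: Double, dt h: Double) {
    let f = 1 + 2 * h * zeta * omega
    let oo = omega * omega
    let hoo = h * oo
    let hhoo = h * hoo
    let detInv = 1 / (f + hhoo)
    let detX = f * x + h * v + hhoo * target
    let detV = v + hoo * (target - x)
    x = detX * detInv
    v = detV * detInv
}

// Settle a dial angle toward 1.0 rad: somewhat bouncy, oscillating at 2 Hz.
var angle = 0.0, velocity = 0.0
let omega = 2 * Double.pi * 2
for _ in 0..<600 {   // ten seconds at 60 steps per second
    springStep(x: &angle, v: &velocity, target: 1.0, zeta: 0.5, omega: omega, dt: 1.0 / 60)
}
print(angle)
```

Designers adjust ζ and ω directly, and the spring stiffness and damping coefficients follow from them, instead of being tuned by trial and error.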

Finally, you might notice that the dial always stops in perfect one-third increments. When you flick the dial, we predict what “wedge” it will stop at. As the dial slows down, we attach a spring to the center of the wedge, so things always fall neatly into place.

This is exactly how some physical dials on actual cameras work: a small spring-loaded bearing presses against a notched ring, and as you turn the dial, the bearing settles into the nearest detent, pulling the ring onto a number.
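The wedge prediction can be sketched under one assumption: friction makes the angular velocity decay exponentially (dω/dt = -k·ω), so the remaining travel is simply ω/k. The Swift functions `predictedRestAngle` and `snapTarget` are hypothetical. The friction constant and the wedge size of 24 stops per turn are also made up for the example, and wedge centers are assumed to sit at whole multiples of the wedge angle.

```swift
import Foundation

// Predicts where a freely spinning dial would stop if friction decays its
// angular velocity exponentially (dω/dt = -k·ω): remaining travel = ω / k.
func predictedRestAngle(angle: Double, angularVelocity: Double, friction k: Double) -> Double {
    angle + angularVelocity / k
}

// Snaps a predicted angle to the center of the nearest wedge, assuming
// wedge centers sit at whole multiples of `wedgeAngle`.
func snapTarget(for predicted: Double, wedgeAngle: Double) -> Double {
    (predicted / wedgeAngle).rounded() * wedgeAngle
}

let wedge = Double.pi / 12   // hypothetical: 24 stops per full turn
let rest = predictedRestAngle(angle: 0, angularVelocity: 2.0, friction: 4.0)   // 0.5 rad
let target = snapTarget(for: rest, wedgeAngle: wedge)                           // π/6 rad
```

Because the target is known as soon as the flick ends, the spring only has to take over near the end of the motion, when the dial has slowed down.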

Conclusions

Building Spectre has been an amazing step forward for us. While it adds more overhead, such as supporting shared libraries, we think it will lead to both Halide and Spectre becoming better apps.

We’re squashing bugs, making code more resilient, and getting more value out of our components than if they were one-and-done for every app.

And that’s a solid foundation for our next big things. We’re very excited about the months ahead, and we can’t wait to show you what we’ve been working on.