After four years, we have a new budget-conscious iPhone. Like the previous SE, it reaches that price point by sticking to components from previous generations. iFixit found the camera sensor interchangeable with that of an iPhone 8.

If this were a story about hardware, we’d just tell you to read iPhone 8 reviews. In short, we think this is a fine camera, though it’s three years old. Sebastiaan is actively out shooting RAW shots with it to see just how well it stacks up.

But this story is about the software. This iPhone goes where no iPhone has gone before with “Single Image Monocular Depth Estimation.” In English, this is the first iPhone that can generate a portrait effect using nothing but a single, 2D image.

“Doesn’t the iPhone XR do that? It also only has a single camera!”, you might say. As we covered previously, while the iPhone XR has a single camera, it still obtains depth information through hardware. It taps into the sensor’s focus pixels, which you can think of as tiny pairs of ‘eyes’ designed to help with focus. The XR uses the very slight differences seen out of each eye to generate a very rough depth map.

The new iPhone SE can’t use focus pixels, because its older sensor doesn’t have enough coverage. Instead, it generates depth entirely through machine learning. It’s easy to test this yourself: take a picture of another picture.

You probably want to use Halide, not just because we built it, but because the first-party camera app only enables depth when there are humans in the photo. We’ll discuss why, shortly.

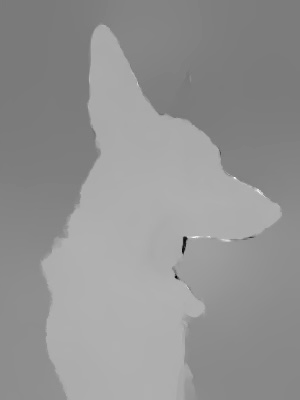

Let’s load this photo full screen and snap a photo with our iPhone SE:

An iPhone XR sees a mostly flat surface, the computer monitor. It seems to refine this depth map using the color image, and guesses that the lump in the middle sits slightly in the foreground.

The iPhone SE2 generates a totally different depth map. It’s wrong, but amazing!

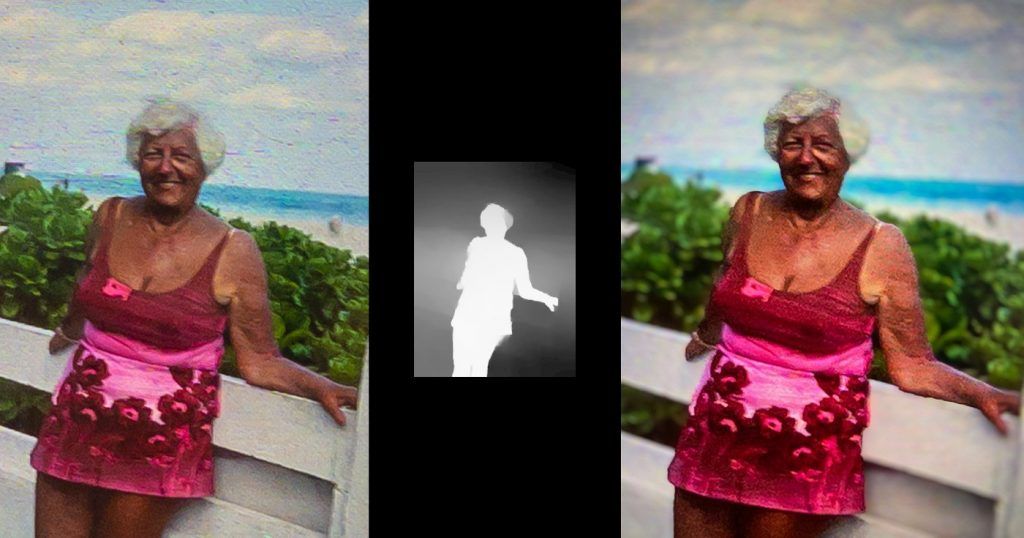

Hmm. While cleaning out my dad’s house, I came across 50-year-old slide film of my grandmother…

Wow! Ok, this is an awesome party trick, but a contrived test. How well does this stack up in the real world?

The Real World: Mostly Amazing Results

So why does Apple prevent the first-party app from shooting non-people? They have a second process that works really well with humans in the frame. Without humans, it sometimes fails in weird ways.

Here the neural network confused the trees in the background with June’s head. Maybe it was confusing them for antlers?

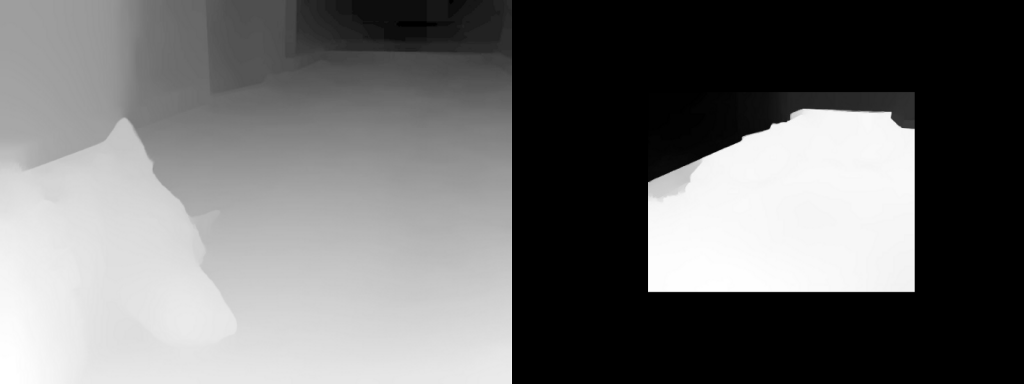

It also appears that the depth map is really good at segmentation (masking different parts of an image), but it’s hit or miss with actual distances. Here we have June wrapped up in existential dread:

I took the above photo using an iPhone 11 Pro and the SE2, and it’s obvious that having multiple cameras generates way better data. While the iPhone 11 captures the full depth of the hallway, the SE2 misses the floor receding into the background.

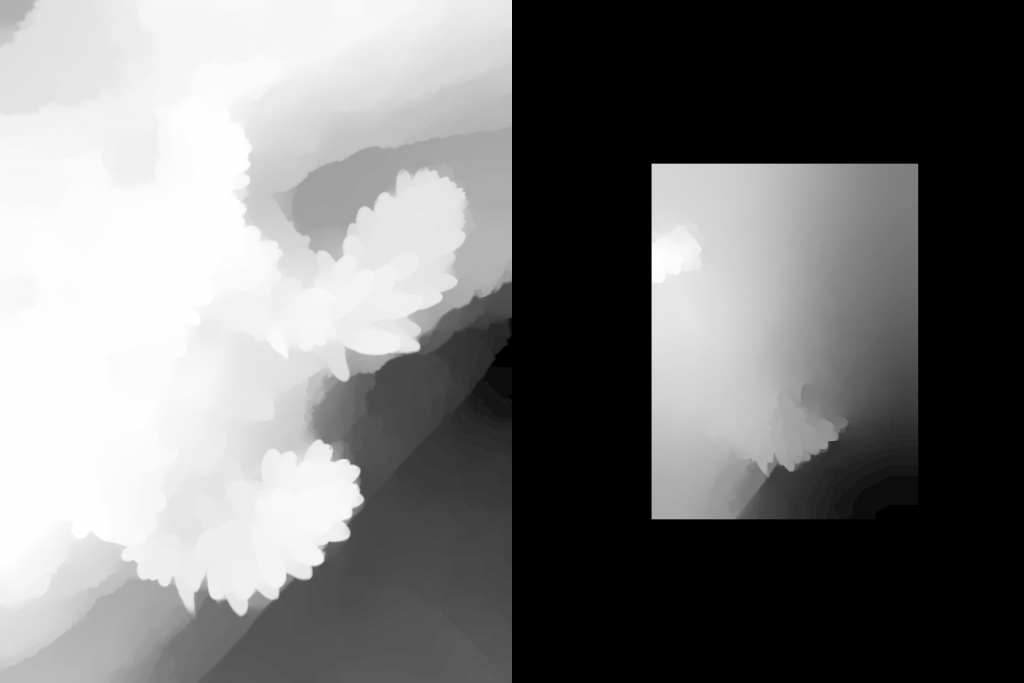

What does this mean for real world photos? Let’s use this succulent as a subject, since it has some nice layering going on.

The iPhone 11 Pro shows distinct edges in the depth map. The SE2 gets the general gist of things. Maybe the neural network was exposed to a lot of dogs, but not as many plants?

What is the final result? The blur all just blends together, rather than elements in the foreground getting a different blur strength than elements in the background.
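To see why a flattened depth map makes the blur blend together, here is a minimal sketch. It assumes a simple model in which blur grows with the gap in inverse depth from the focus plane; the function name, the `strength` parameter, and the depth values are all made up for illustration and are not how Apple's renderer works.

```python
def blur_radius(depth_m: float, focus_m: float, strength: float = 10.0) -> float:
    """Blur radius in pixels, growing with the inverse-depth gap from the focus plane."""
    return strength * abs(1.0 / focus_m - 1.0 / depth_m)

# Hypothetical depths in metres for four pixels: the subject, then three background layers.
layered_depths = [0.5, 1.0, 2.0, 4.0]    # distinct layers, as a multi-camera map might report
flattened_depths = [0.5, 3.0, 3.0, 3.0]  # background collapsed to a single distance

focus = 0.5  # focus on the subject
layered_blur = [blur_radius(d, focus) for d in layered_depths]
flattened_blur = [blur_radius(d, focus) for d in flattened_depths]

print(layered_blur)    # each background layer gets its own radius
print(flattened_blur)  # every background pixel gets the same radius
```

With distinct layers, each distance gets its own blur strength. With the flattened map, everything behind the subject is blurred by the same amount.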

If you prefer the iPhone SE2’s look, you can always recreate it with an iPhone 11 Pro. You can’t get the distinct layering of the iPhone 11 Pro on an SE2.

Layering is where Apple’s second machine learning process plays a role. Alongside the iPhone XR, Apple introduced a “Portrait Effects Matte” API that could detect humans in a photo and create a very detailed mask. In fact, this matte is higher resolution than the underlying depth data.

As long as the subject in the foreground is sharp and in focus, most people will never know you’re playing fast and loose with the background blur.
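One way to picture how a detailed matte hides a rough depth map is to blend a sharp image with a blurred one, pixel by pixel, using the matte as the weight. This is a sketch with made-up grayscale values and a hypothetical `composite` helper, not Apple's actual pipeline.

```python
def composite(sharp, blurred, matte):
    """Blend per pixel: matte 1.0 keeps the sharp subject, 0.0 keeps the blurred background."""
    return [
        [m * s + (1.0 - m) * b for s, b, m in zip(sharp_row, blurred_row, matte_row)]
        for sharp_row, blurred_row, matte_row in zip(sharp, blurred, matte)
    ]

# One row of three pixels: subject, subject edge (such as hair), background.
sharp = [[200.0, 200.0, 50.0]]
blurred = [[120.0, 120.0, 120.0]]
matte = [[1.0, 0.5, 0.0]]

result = composite(sharp, blurred, matte)
print(result)
```

The subject pixel stays untouched, the edge pixel is a half-and-half mix, and the background pixel comes entirely from the blurred image, however that blur was computed.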

So back to the question, “Why does Apple limit this to people?”

Apple tends to under-promise and over-deliver. There’s nothing stopping them from letting you take depth photos of everything, but they’d rather set the expectation, “Portrait Mode is only for people,” than disappoint users with a depth effect that stumbles from time to time.

We’re happy that this depth data — both the human matte and the newfangled machine-learned depth map — was exposed to us developers, though.

So the next question is whether machine learning will ever get to the point that we don’t need multi-camera devices.

The Challenge of ML Depth Estimation

Our brains are amazing. Unlike the new iPad Pro, we don’t have any LIDAR shooting out of our eyes feeding us depth information. Instead, our brains assemble information from a number of sources.

The best source of depth comes from comparing the two images coming from each of our eyes. Our brains “connect the dots” between the images. The more they diverge, the closer an object must be. This binocular approach is similar to what powers depth on dual camera iPhones.
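The two-eye comparison can be sketched with the standard pinhole stereo model, where depth equals focal length times the distance between the two viewpoints, divided by how far a point shifts between the images. A larger shift means a closer object. The function name and the numbers below are illustrative assumptions, not measurements from any iPhone.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo model: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero would put the point at infinity")
    return focal_px * baseline_m / disparity_px

# Hypothetical rig: 1000 px focal length, two viewpoints 1 cm apart.
near = depth_from_disparity(1000.0, 0.01, 20.0)  # large shift between the two views
far = depth_from_disparity(1000.0, 0.01, 2.0)    # small shift between the two views
print(near, far)
```

In this made-up setup, the point that shifts ten times as much between the views comes out ten times closer.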

Another way to guess depth is through motion. As you walk, objects in the distance move slower than objects nearby.

This is similar to how augmented reality apps sense your location in the world. This isn’t a great solution for photographers, because it’s a hassle to ask someone to wave their phone in the air for several seconds before snapping a photo.
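The motion cue can be put into rough numbers. For an object directly to your side, its apparent angular speed is your walking speed divided by its distance. The helper name and the values below are assumptions chosen only for illustration.

```python
def angular_speed(walking_speed_mps: float, distance_m: float) -> float:
    """Apparent angular speed (rad/s) of an object directly to the side of a moving observer."""
    return walking_speed_mps / distance_m

walking = 1.4  # metres per second, a typical walking pace (assumed)
tree = angular_speed(walking, 2.0)         # a tree two metres away
mountain = angular_speed(walking, 2000.0)  # a mountain two kilometres away
print(tree, mountain)
```

The nearby tree sweeps across your view a thousand times faster than the distant mountain, and that difference is the depth signal.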

That brings us to our last resort, figuring out depth from a single (monocular) still image. If you’ve known anyone who can only see out of one eye, you know they live fairly normal lives; it just takes a bit more effort to, say, drive a car. This is because you rely on other clues to judge distance, such as the relative size of known objects.

For example, you know the typical size of a phone. If you see a really big phone, your brain guesses it’s pretty close to your eyes, even if you’ve never seen that specific phone before. Most of the time, you’re right.
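The known-size cue follows from the same pinhole geometry: if you know how tall an object really is and how tall it appears in the image, you can solve for its distance. This is a minimal sketch with an assumed focal length and an assumed 15 cm phone. It is not a description of how the SE's neural network works.

```python
def distance_from_known_size(focal_px: float, real_height_m: float, image_height_px: float) -> float:
    """Pinhole model: distance = focal length * real height / apparent height in the image."""
    return focal_px * real_height_m / image_height_px

PHONE_HEIGHT_M = 0.15  # assumed typical phone height

big_in_frame = distance_from_known_size(1000.0, PHONE_HEIGHT_M, 500.0)
small_in_frame = distance_from_known_size(1000.0, PHONE_HEIGHT_M, 50.0)
print(big_in_frame, small_in_frame)
```

The phone that fills more of the frame is estimated to be closer, which matches the guess your brain makes.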

Cool. Just train a neural network to detect these hints, right?

Unfortunately, we’ve described an ill-posed problem. In a well-posed problem, there is one, and only one, solution. Those problems sound like, “A train leaves Chicago at 60 MPH. Another train leaves New York at 80 MPH…”

Some images just can’t be solved, either because they lack enough hints, or because they’re literally unsolvable.
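A small worked example shows why a single image can be ambiguous. Under the pinhole model, an ordinary phone held close and a giant poster of a phone ten times farther away project to exactly the same size on the sensor. The function name and all values here are hypothetical.

```python
def projected_height_px(focal_px: float, real_height_m: float, distance_m: float) -> float:
    """Pinhole model: apparent height in pixels of an object at a given distance."""
    return focal_px * real_height_m / distance_m

FOCAL_PX = 1000.0  # assumed focal length in pixels

real_phone = projected_height_px(FOCAL_PX, 0.15, 0.3)   # 15 cm phone, 30 cm away
giant_poster = projected_height_px(FOCAL_PX, 1.5, 3.0)  # 1.5 m poster of a phone, 3 m away
print(real_phone, giant_poster)
```

Both scenes produce the same measurement, so size alone cannot tell them apart. Any estimator has to lean on prior assumptions about what it is looking at.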

At the end of the day, neural networks feel magical, but they’re bound by the same limitations as human intelligence. In some scenarios, a single image just isn’t enough. A machine learning model might come up with a plausible depth map, but that doesn’t mean it reflects reality.

That’s fine if you’re just looking to create a cool portrait effect with zero effort! If your goal is to accurately capture a scene, for maximum editing latitude, this is where you want a second vantage point (dual camera system) or other sensors (LIDAR).

Will machine learning ever surpass a multi-camera phone? No, because any system will benefit from having more data. We evolved two eyes for a reason.

The question is when these depth estimates are so close, and the misfires are so rare, that even hardcore photographers can’t justify paying a premium for the extra hardware. We don’t think it’s this year, but at the current pace that machine learning moves, this may be years rather than decades away.

It’s an interesting time for photography.

Try it yourself

If this made you more curious about the cool world of modern depth capture, you can try it yourself: we launched our update for Halide last Friday, on iPhone SE launch day.

If you have any iPhone that supports Portrait mode, you can use Halide to shoot in our ‘Depth’ mode and view the resulting depth maps. It’s pretty fun to see how your iPhone tries to sense the wonderfully three-dimensional world.

Thanks for reading, and if you have any more iPhone SE questions, reach out to us on Twitter. Happy snapping!

[Editor’s Note: In an earlier version of this post we said the iPhone SE2 doesn’t have focus pixels. It does have focus pixels, but they don’t have enough coverage to generate a depth map.]