At Halide HQ, we’ve been busily working away on a very deep, technical analysis of what’s new in iPhone 12 cameras this year. All four of ’em. On Friday, we got our hands on the final iPhones in this year’s line-up, the iPhone 12 mini and the iPhone 12 Pro Max.

The Pro Max was the wildcard this year. Apple devoted a whole section of their keynote to it, and we got a ton of questions about it on our Twitter. Some reviewers on the internet dismissed it as indistinguishable from the iPhone 12 camera, while others called it quite good.

We’ll get into the iPhone 12 camera — we have a lot of thoughts on that. But the iPhone 12 Pro Max tests were quite surprising. So surprising we’ve decided to create this whole separate post about it.

What Makes the iPhone 12 Pro Max Special

We had an advance look at the Pro Max specs, but to recap, it has:

- A 47% larger sensor

- A faster ƒ/1.6 lens

- A brand-new ‘sensor shift’ stabilization system for low-light

- 87% higher ISO sensitivity

- A new telephoto lens, reaching a new length of 65mm (full-frame equivalent)

Specs are cool, but now that we have the hardware we can take a deeper look at how these changes affect real-world photography.

A bigger sensor

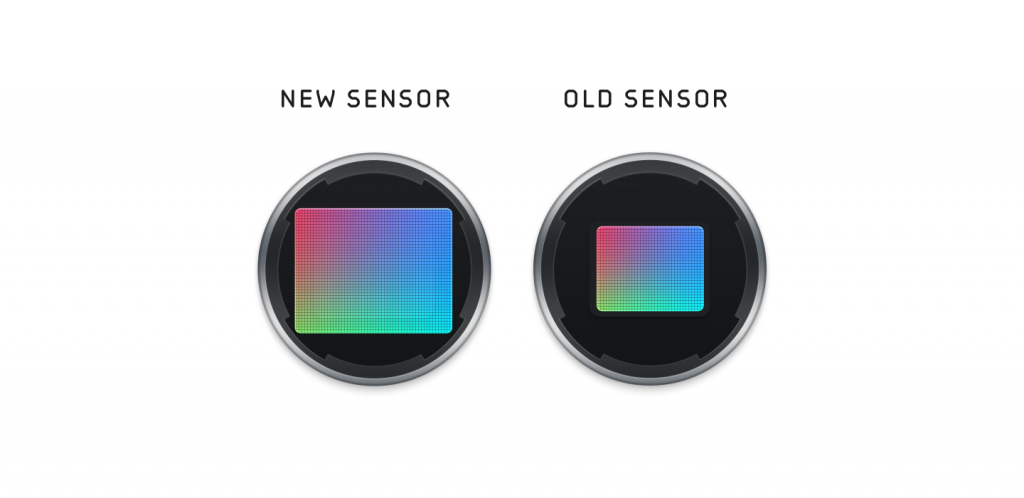

The biggest news was that the iPhone 12 Pro Max got a bigger sensor. 47% bigger. Sounds big. Is it? What does that even mean? Let’s visualize it:

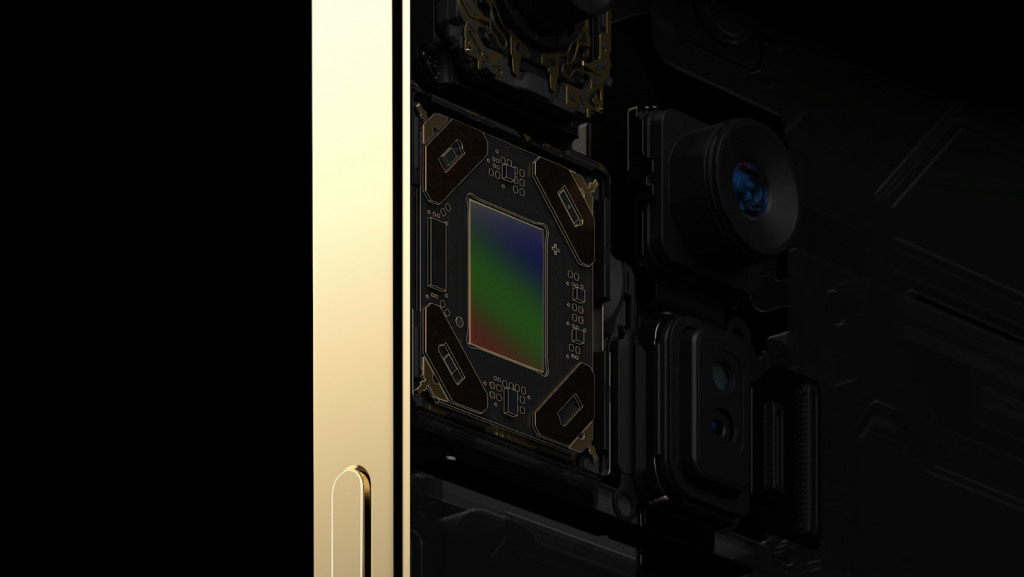

That’s significant. When iFixit took this chonker apart, they also noted how large it was:

Ok, practically speaking, what does this mean?

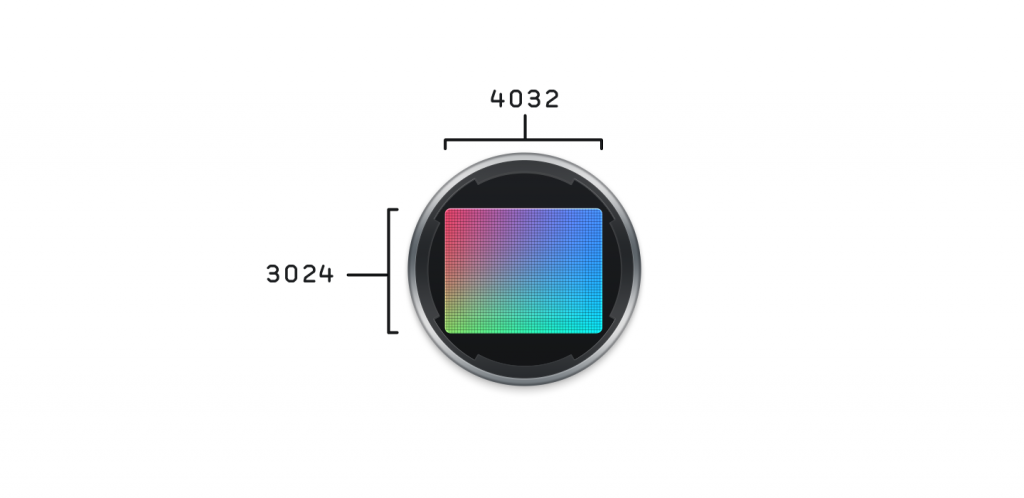

Imagine a camera sensor as a collection of lots of smaller sensors. Each collects red, green, or blue light. These sensors are packed together to get an image that measures 3024 by 4032 pixels. (Technically, each pixel on that sensor is called a ‘photosite,’ as they collect, yes, photons.)

You’d think a bigger sensor means more pixels — and indeed, a bigger sensor could allow you to pack in more pixels. But we’re at a point of diminishing returns in megapixel wars.

Instead, Apple decided to make the photosites bigger, because one of the most important aspects of image quality (and really, life in general) is signal to noise.

Giving these sensor sites more room and making them larger makes them more sensitive to light. More light means more signal, less noise, and sharper results. Let’s look at a comparison:
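To put a rough number on that, here is a minimal sketch of the signal-to-noise argument. It assumes the simplest possible model, where photon shot noise is the only noise source, and it assumes each photosite gets the full 47% gain in area because the pixel count stays at 12 megapixels. The photon counts are made-up values for illustration, not measurements.

```python
import math

def shot_noise_snr(photons: float) -> float:
    """SNR of a photosite limited only by photon shot noise.

    Photon arrival is Poisson-distributed, so noise = sqrt(signal)
    and SNR = signal / sqrt(signal) = sqrt(signal).
    """
    return math.sqrt(photons)

# Assumption: same pixel count on a 47% larger sensor,
# so each photosite collects 47% more light.
AREA_GAIN = 1.47

# Hypothetical photon counts per photosite on the smaller sensor.
for label, photons in [("daylight", 10_000), ("dusk", 400), ("night", 25)]:
    small = shot_noise_snr(photons)
    large = shot_noise_snr(photons * AREA_GAIN)
    print(f"{label:>8}: SNR {small:6.1f} -> {large:6.1f} ({large / small:.2f}x)")
```

Under this model the relative gain is the same in every scene (the square root of 1.47, or about 1.21×). The difference is where it lands: a bump from an already high SNR in daylight is hard to see, while the same bump on a very low SNR at night is not. Real sensors also have read noise, which this sketch ignores.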

Can you tell the difference? For reference, the iPhone 12 Pro Max is at the bottom. I can’t.

Here’s why we’re seeing stories that the camera is a minor difference at best: Most people who aren’t seeing the dramatic difference are shooting in daylight, with a fast ƒ/1.6 lens. On top of that, Apple’s intelligent image processing combines multiple shots together, which makes it harder to look into the hardware.

We get a better look with RAWs (shot with Halide, of course). RAWs omit steps like multi-exposure combination and noise reduction, so there will be some noise, but that lets us see how much noise the camera really has to deal with.

Since the iPhone 12 has a bit more noise, there’s less detail. Still, the detail isn’t easy to spot in bright daylight. Everything changes between the iPhone 12 and 12 Pro Max the moment sunset begins. As shadows get darker and light gets dimmer, you can start seeing shifts in detail.

The difference gets dramatic as the sunset progresses:

Again, these are RAW files shot in Halide. Meanwhile, photos in the first-party Camera app get a bit… smudgy:

We can zoom in on this a bit to see that better:

It seems Apple’s Camera app applies just as much noise reduction to the 12 Pro Max image as it does to the regular iPhone 12 image. This unfortunately ‘blurs’ the superior detail out of the iPhone 12 Pro Max shot.

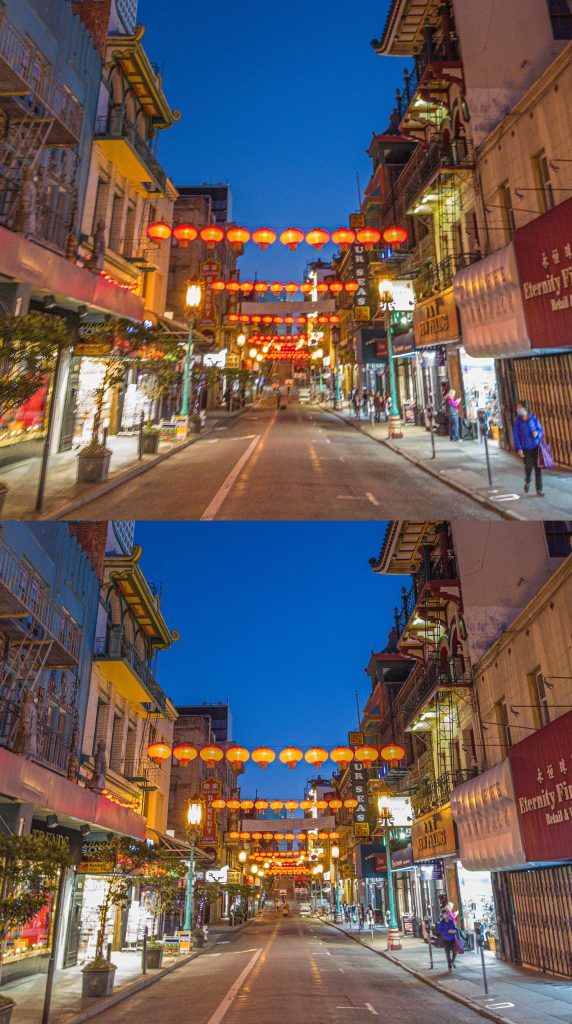

This is even more evident when you look at images closely and there’s even less light. Take this shot I took passing a restaurant in Chinatown (the owner has consented to my posting this image):

Smart HDR rescues detail in the highlights and shadows here, but it is at the expense of a lot of sharpness.

Mind you, this is a really close-up zoom of a cellphone photo. Our expectation is that it is kind of mushy and lacks detail. Instead, the iPhone 12 Pro Max packs in incredible levels of detail down to the pixel level.

It might not pack more resolution in terms of the image size, but the resolution in that 12-megapixel image is far higher. There’s just much more detail in every shot, especially in RAW.

The iPhone 12 Pro shot of the same scene shows the kind of noise that is normally being smudged out:

Seeing how there’s just that much more detail in the raw image data from the iPhone 12 Pro Max, it could stand to have less processing to show off its higher signal to noise ratio. It’s possible Apple will tweak the image pipeline in the future to bring some of that detail out better.

In the meantime, we’ve got RAW photography. Hooray!

Now, with the sun past the horizon, there’s no contest between the iPhone 12 and the Pro Max anymore. In these close-to-nighttime and nighttime shots, the Pro Max is a clear winner.

We’d say this sensor is a big leap ahead in image quality.

In our first look at the hardware changes, we said larger sensors offer a different ‘look’. That means shallower depth of field, but it may also render scenes differently. If you’re a photographer who has been in the field long enough to have made the move from APS-C to full-frame, you might have hoped for a similar sudden, dramatic change of ‘look’. Sorry: that’s not happening here.

Sensor shift

We should point out that all examples were shot handheld. While you could compare the iPhone 12 and the iPhone 12 Pro Max by putting them on a tripod, it’s kind of a pointless benchmark unless you mount your phone on a tripod whenever you shoot. We believe good comparisons should be done in real-world conditions, which means handheld shots.

That brings us to the next big change. Since the iPhone 6 Plus, there’s been magical stabilization technology that counteracts shaky hand movements, so you can land sharper shots. However, the stabilization was previously applied to the optics system.

The iPhone 12 Pro Max applies stabilization to the sensor. Since this is a light, flat plane, it’s technically feasible to move it further and faster than an entire lens assembly.

At first you may think this will help video. In practice, video stabilization is absurdly good as-is, thanks to intelligent software that smoothly crops and transforms your video frames, even as you run down a street. Stabilizing the sensor can help, but only so much. This isn’t a Steadicam.

Low light photography is a different story. Software can’t un-shake your hand. Night Mode on iPhone gets around this by taking a ton of photos and just throwing out the ones that ended up too shaky.

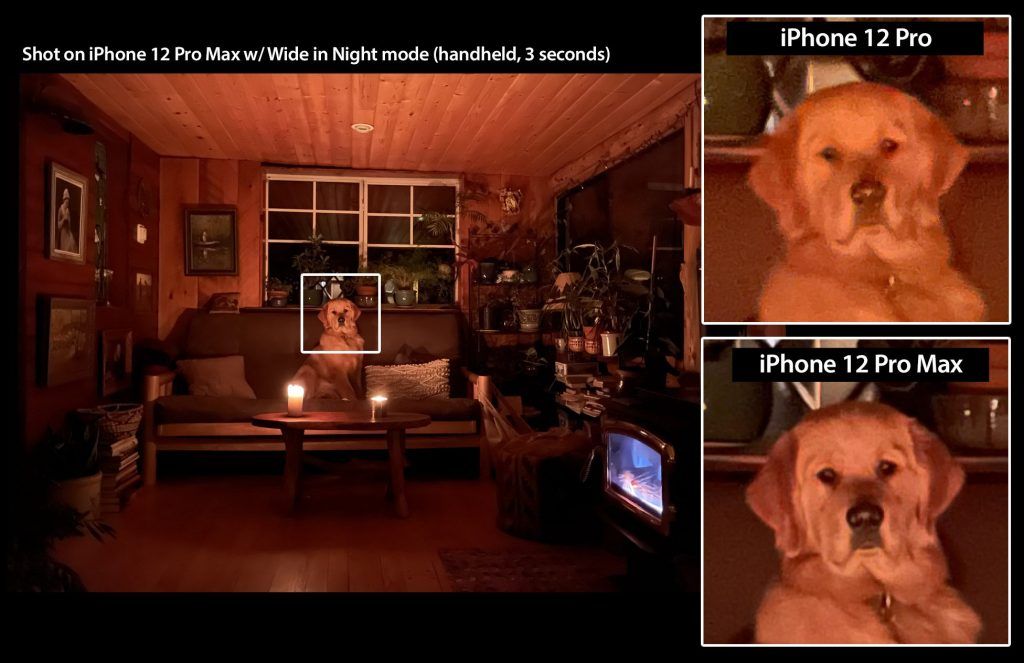

Now we see the iPhone 12 Pro Max pull ahead — way ahead, really — of its smaller iPhone 12 mini, 12 and 12 Pro siblings:

That’s a RAW shot. Taking a single frame, there’s a clear winner. But even in that multi-image-taking, auto-stabilizing Night Mode, the difference in detail is obvious:

(images via Austin Mann’s fantastic iPhone 12 Pro Max initial review)

Even though Night Mode involves heavy computational photography, it ultimately benefits from having sharper images to work with before it merges them into the final shot.

Achieving the impossible

The iPhone 12 Pro Max’s new sensor-shift technology really shines when you take away that computational magic and focus on traditional, single-shot photography.

Notice that sharp RAW shot we just looked at? That was taken at a fraction of a second. The longer you open the shutter, the more light you can let in, and the less noise you get.

With a traditional DSLR, nobody would dare take a one-second photo handheld.

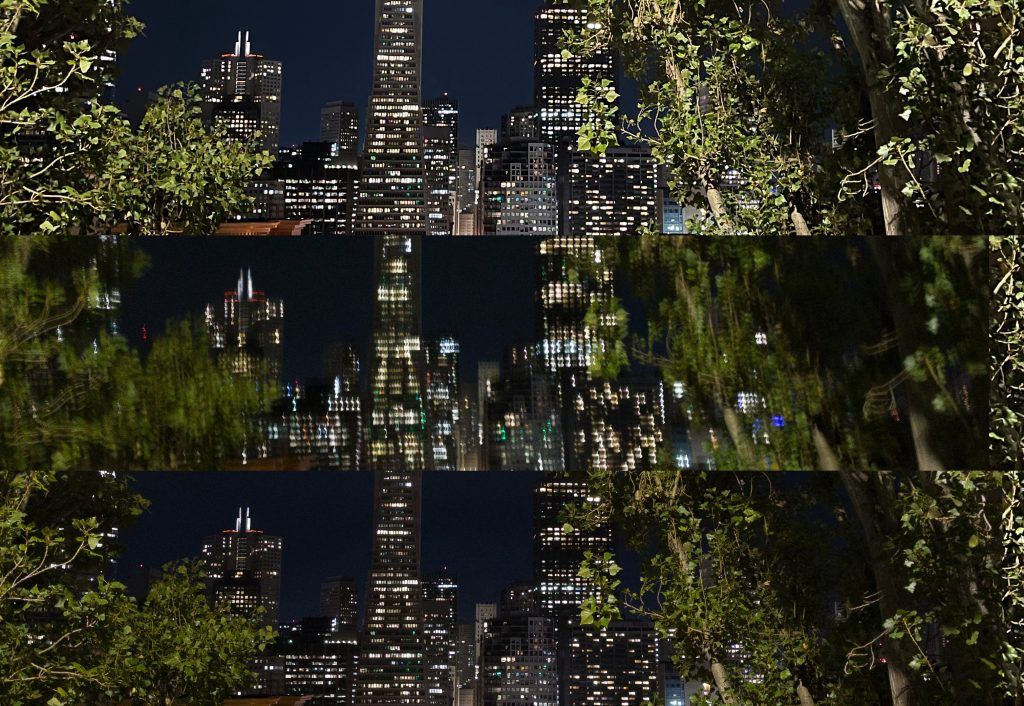

Yet the iPhone 12 Pro Max can take sharp images at ridiculous exposure times.

How about a third to half a second?

This looks like an image I get out of a modern mirrorless camera. Here’s a comparison with the Night Mode in the Camera app, and the (poor thing) iPhone 12 Pro:

In this last comparison, you can see that Night Mode can get this shot, but it sacrifices lots of fine detail and makes the scene look a bit unnatural. The iPhone 12 Pro, with its more basic stabilization, can’t get a steady frame.

It would be impossible to get a shot like this on the iPhone 12, 11 Pro, or heck, the high-end digital cameras I have lying around the studio. This is very cool stuff.
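For a sense of how far outside the usual comfort zone these exposures are, here is a small sketch using the photographer’s ‘reciprocal rule’ of thumb: the slowest safe handheld shutter speed is roughly one over the full-frame-equivalent focal length. The 26mm figure for the wide camera is our assumption for this example, and the rule itself is a guideline rather than a hard limit.

```python
import math

def reciprocal_rule_shutter(focal_length_mm: float) -> float:
    """Slowest 'safe' handheld shutter time in seconds (1 / focal length rule of thumb)."""
    return 1.0 / focal_length_mm

def stops_slower(exposure_s: float, reference_s: float) -> float:
    """Number of stops (doublings of exposure time) between two shutter times."""
    return math.log2(exposure_s / reference_s)

# Assumption: full-frame-equivalent focal length of the main wide camera.
WIDE_MM = 26.0

safe = reciprocal_rule_shutter(WIDE_MM)
for exposure in (1 / 3, 1 / 2, 1.0):
    stops = stops_slower(exposure, safe)
    print(f"{exposure:.2f} s is {stops:.1f} stops slower than the rule of thumb")
```

By this estimate, a half-second handheld exposure is a little under four stops slower than the rule of thumb would suggest, which is the gap the stabilization has to cover.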

65mm lens

As a professed lover of the 52mm telephoto lens on the iPhone 11 Pro, I was particularly excited about having an even longer telephoto lens. Yes, it’s just a bit longer, now reaching 65mm — 2.5× instead of the previous 2× zoom — but reach is reach.

I quite enjoy getting the extra reach:

A longer lens in this case means a slower aperture, so a bit less light collected. That, too, isn’t that noticeable until sunset:

After all: less light collected means a bit more noise. That’s OK before it gets really dark: you can see what kind of noise the image pipeline has to deal with well in the comparison above.

The iPhone 11 Pro and 12 Pro both feature a regular 52mm (2×) lens with an ƒ/2.0 aperture, while the 12 Pro Max’s longer lens makes do with ƒ/2.2. In practice, that makes it worse at night. And on top of receiving less light, longer lenses amplify any camera shake.
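The numbers behind that trade-off are easy to work out. The sketch below assumes a 26mm-equivalent wide camera as the 1× baseline (our assumption), uses the standard relationship that light per unit of sensor area scales with one over the ƒ-number squared, and treats shake blur as scaling linearly with focal length, which is a simplification.

```python
import math

# Assumption: equivalent focal length of the 1x wide camera.
WIDE_MM = 26.0

def zoom_factor(focal_length_mm: float, base_mm: float = WIDE_MM) -> float:
    """Zoom relative to the wide camera."""
    return focal_length_mm / base_mm

def relative_light(f_number: float, reference_f_number: float) -> float:
    """Light per unit sensor area relative to a reference aperture (scales with 1 / N^2)."""
    return (reference_f_number / f_number) ** 2

print(zoom_factor(52))  # 2.0
print(zoom_factor(65))  # 2.5

light = relative_light(2.2, 2.0)
print(f"f/2.2 passes {light:.0%} of the light of f/2.0 ({math.log2(light):+.1f} stops)")

# Simplification: the same hand tremor shifts the image in proportion to focal length.
print(f"Shake is magnified {65 / 52:.2f}x compared with the 52mm lens")
```

So the longer lens gives up roughly a sixth of the light and magnifies shake by a quarter, both of which only start to matter once the light fades.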

With Night Mode coming to all lenses on the iPhone 12 except the telephoto lens, that makes it less than ideal when it’s dark out. I found that once the sun sets, you are sometimes better off using the new and incredibly sharp, stabilized wide angle sensor and cropping the result.

It seems the first-party camera agrees with me. Once it gets dark out, open up the app, tap the “2.5x” button, and cover the telephoto camera (on the iPhone 12 Pro Max, the top lens) with your finger. You won’t see it covered.

In low light, the first-party camera quietly changes over to the wide angle lens, which receives more light. It then crops the result. It’s deception, sure, but it’s classic Apple: they know what’s best for you.*

*a note: We don’t do this in Halide, since knowing exactly what lens you’re shooting with is important for creative control. But we tackle a different audience than Apple. We really do believe that their way of auto-magically switching and swapping lenses is the right decision when you’re trying to serve the broadest possible audience.
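Cropping the wide camera instead of using the telephoto has a cost in pixels. As a rough sketch, assuming the 26mm-equivalent wide camera from before and a plain centre crop of the 12-megapixel frame, this is how much of the image is left. It does not model whatever upscaling the camera pipeline may apply afterwards.

```python
WIDE_WIDTH, WIDE_HEIGHT = 4032, 3024  # the 12 MP frame size
WIDE_MM, TELE_MM = 26.0, 65.0         # assumption: 26mm-equivalent wide camera

def cropped_size(width: int, height: int, crop: float) -> tuple:
    """Pixel dimensions left after a centre crop by a linear factor."""
    return int(width / crop), int(height / crop)

crop = TELE_MM / WIDE_MM  # 2.5x linear crop to match the telephoto framing
w, h = cropped_size(WIDE_WIDTH, WIDE_HEIGHT, crop)
print(f"{w} x {h} = {w * h / 1e6:.1f} MP before any upscaling")
```

That is under two megapixels of real data, which is why a sharp, clean wide-angle frame matters so much when this swap happens.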

An Aside about the future: ProRAW

A few days ago, Apple released a beta of iOS 14.3, which includes ProRAW. Initially teased during the announcement of the iPhone 12 Pro, ProRAW is a new format by Apple that ostensibly brings the best of the huge computational advancements in the iPhone camera to a format that offers complete creative control.

We’re writing a deep dive on what ProRAW means, but there’s one practical implication worth talking about today. One reason people choose RAW is for fine-grained control over noise reduction, and unfortunately ProRAW files have already had some noise reduction applied. Not as much as the JPEGs of the past, but it’s there.

For this and a few other reasons, ProRAW is less of a ‘true’ RAW, and more of a very high quality format for your shots. Aside from the noise reduction, it does expose fine control over magical processes like Smart HDR.

So far ProRAW has proven to be fantastic quite often, but we’ve also observed tradeoffs. However, ProRAW is currently in beta. We’ve been submitting feedback to Apple. Once ProRAW ships, we’ll publish our analysis. That being said, we can say today, with great confidence, that ProRAW is coming to Halide.

Anyway, back to regular ol’ RAWs…

Halide and the iPhone 12 Pro Max

Halide, as a pro camera app, has never captured RAW files this great out of an iPhone, and by a wide margin. We’re incredibly excited to begin work on getting even better results out of this camera.

So let’s get started: we’ve just released Halide 2.0.4, with updates for the new iPhone 12 Pro Max.

We have improved framelines and lens controls for the new 65mm lens, tweaked exposure logic and more to take full advantage of this new phone and its tiny mini sibling. We’re not done there, though: there seems to be a lot left for us to squeeze out of this camera.

Conclusion

There are varied opinions about the iPhone 12 Pro Max. Is it a huge leap forward in iPhone camera quality? We say yes: the results speak for themselves.

But why did so many first impressions fail to pick up on the improved quality? It’s in the name of the device: iPhone 12 Pro Max.

As developers of a camera app, we find the results mind-blowing. It achieves images previously only seen in dedicated cameras, with sensors four times its size. It allows photographers to get steady and well-exposed shots in conditions that weren’t imaginable a year ago. It captures low-light shots beyond anything we’ve seen on an iPhone. By a lot.

If you are an advanced user, the type that uses more than just the stock apps, this phone offers a lot.

Compare it to when Apple releases a new chip. They’re already ahead of the curve, and then they release an even newer chip that blows the old one away. Some will ask: “What can this even be used for?” Before you know it, you have console quality graphics and amazing AR experiences.

We’d compare this camera to a next-generation chip. In daily use, an average person won’t notice the signal to noise ratio or longer lens or superb stabilization and sensitivity. But as developers like us build apps for it, and this camera system works its way into future iPhones over the next few years, we can expect even the ‘traditional’ camera market to be forced to step up.

The iPhone 12 Pro Max is a Pro photographer’s iPhone. And we couldn’t be more excited to start building for it.