Two weeks ago, Apple dropped a new iPad Pro into our lives via their website. One of its headline features? A brand-new camera array. In this quick article, we look at what’s changed, including a whole new “3D” sensor, and what this new technology enables.

If you’re just joining us: we’re the team that makes Halide, an iPhone camera app.

A New Camera Array

Does the new iPad Pro pack a great camera? We have to look at the whole package. Today’s high-end smartphones pack a cluster of cameras, with multiple lenses (and sensors!). Google entered the fray last year with the Pixel 4 double-shooter. Now the iPad Pro joins this camera superstructure elite.

Let’s start with the ‘primary camera’:

The Wide Angle

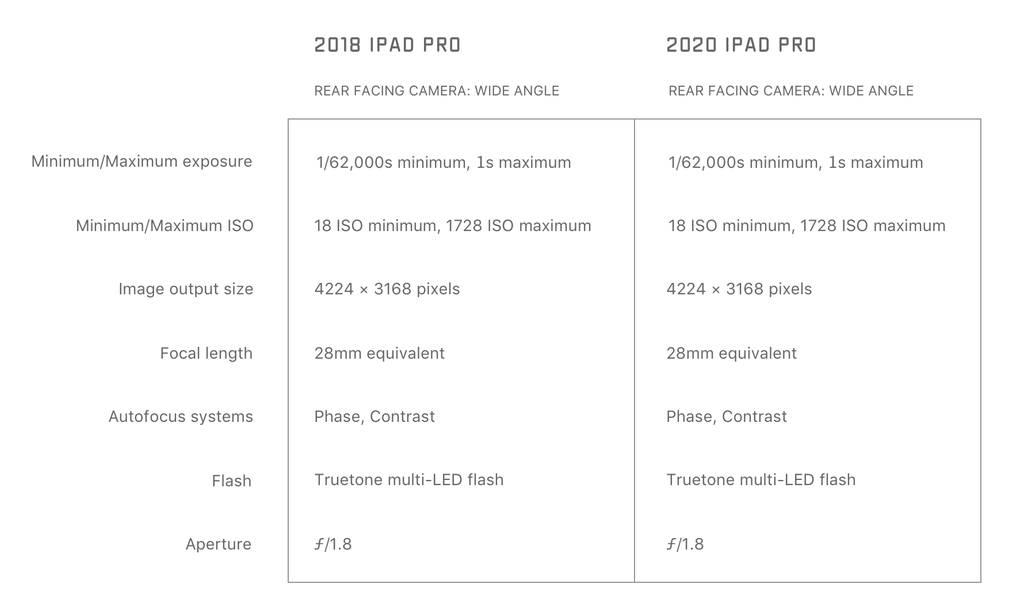

Not to be confused with the ultra-wide angle lens we’ll get to shortly, this 28mm wide-angle camera is similar to all cameras ever put on the iPad, going back to the iPad 2. It’s table stakes. How does it compare?

iPad Pro 2020 vs iPad Pro 2018

Shot head-to-head with the iPad Pro that preceded it, the changes are… minimal. It appears the sensor and lens are either identical or nearly identical to the last-generation iPad Pro’s. They certainly match physically: new accessories like the upcoming Magic Keyboard fit both generations of iPad.

The camera module hasn’t moved much; it just grew a little bit, adding two new sensors to its mesa-like structure.

Processing appears a little different. The sensors offer wider ISO (light sensitivity) ranges, and low-light image processing is slightly improved.

These minor changes are likely software-only. Big changes like ‘Deep Fusion’ typically require new chips, but the iPad Pro’s chipset is basically identical to the 2018 model’s, with an extra GPU core enabled.

Also unchanged? The lens focal length, which determines the field of view. iPhone XS and XR moved to an ever-so-slightly wider 26mm lens; this carried over to the iPhone 11 and 11 Pro, with their ultra-wide lens being exactly half that at 13mm.

Not the iPad Pro. iPhone 8 and iPhone X had 28mm lenses — and so does this iPad Pro, like the iPad before it.
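To put numbers on “ever-so-slightly wider”, here’s a minimal sketch that converts a 35mm-equivalent focal length into a diagonal field of view. It assumes an idealized rectilinear lens and the standard 36 × 24 mm full-frame reference; real lenses, distortion correction and cropping will shift the results a little.

```python
import math

# 35mm-equivalent focal lengths are defined against a 36 x 24 mm full-frame sensor.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def diagonal_fov_degrees(equiv_focal_mm: float) -> float:
    """Diagonal field of view of an idealized rectilinear lens, in degrees."""
    half_angle = math.atan(FULL_FRAME_DIAGONAL_MM / (2.0 * equiv_focal_mm))
    return math.degrees(2.0 * half_angle)


if __name__ == "__main__":
    lenses = {
        "iPad Pro / iPhone 8 / iPhone X wide (28mm)": 28.0,
        "iPhone XS / XR / 11 wide (26mm)": 26.0,
    }
    for name, focal in lenses.items():
        print(f"{name}: {diagonal_fov_degrees(focal):.1f} degrees diagonal")
```

Under these assumptions, the 2mm difference between the two wide lenses is worth roughly four degrees of diagonal view.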

Here’s our full Technical Readout and comparison:

If you need something to compare it to, it’s the iPhone 8 camera. Don’t expect parity with Apple’s latest iPhone 11 Pro shooters, but it’s still a great set of cameras.

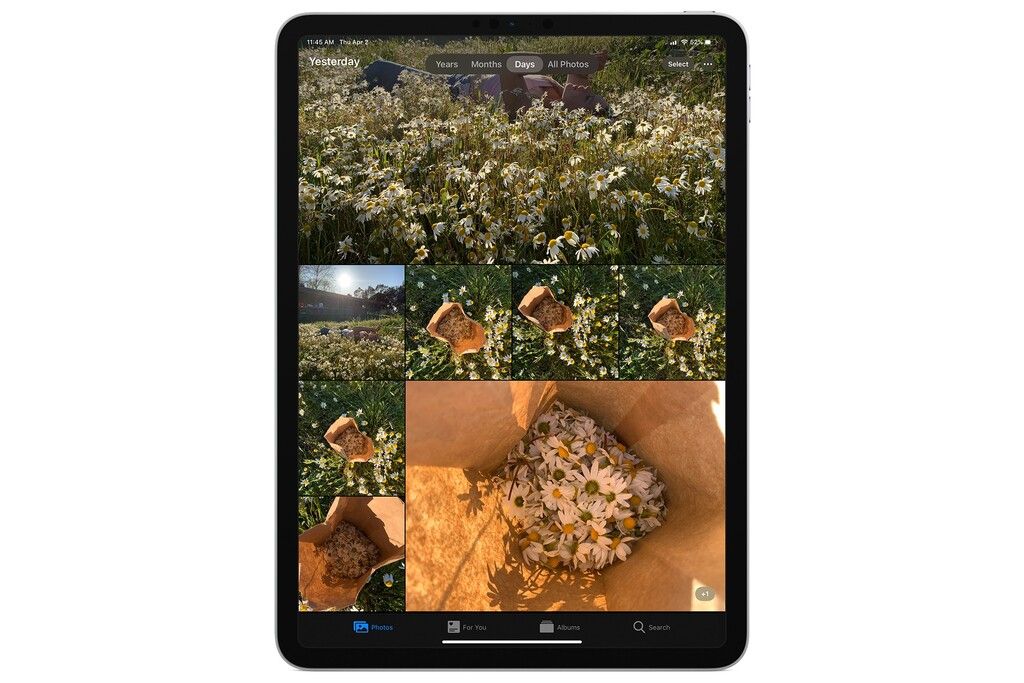

(Yes, our test setting definitely looks like a picturesque Apple marketing mockup.)

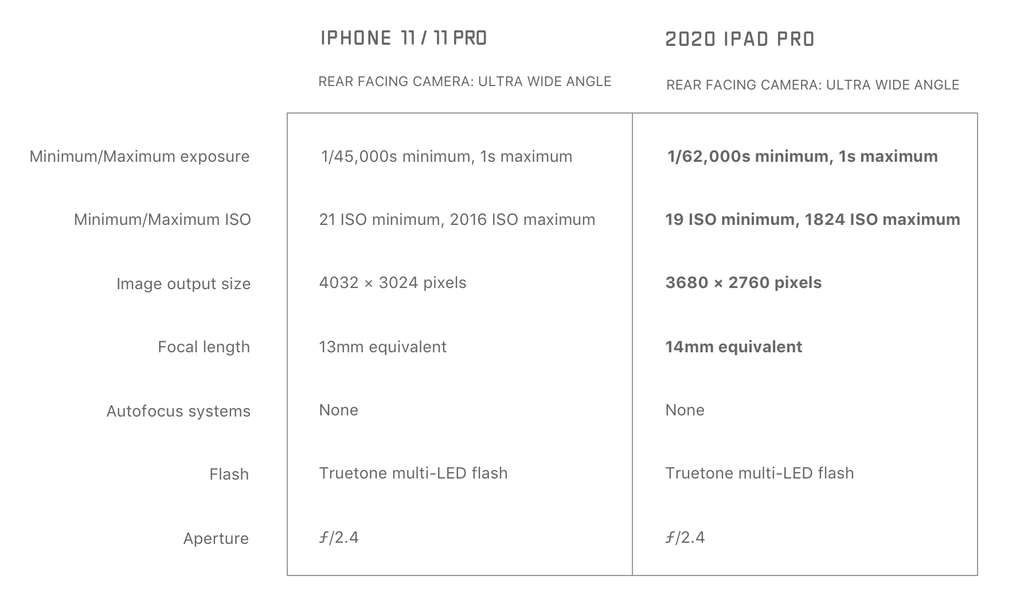

The ultra wide angle camera

Here’s a big and obvious change: this is the first iPad with a second rear camera. The ultra-wide, famous from last year’s iPhone 11 and iPhone 11 Pro, arrives on the iPad. There are some changes compared to the iPhone, however:

Remember when I said the iPad Pro’s wide-angle lens was a bit less wide than the iPhone XS/XR/11 series of phones? That applies here, too: the ultra-wide differs from the iPhone 11 and 11 Pro’s ultra-wide lens by a grand total of 1mm, coming in at a tidy 14mm. In daily usage, it’s not noticeable.
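Another way to read that 1mm: for rectilinear lenses with the same aspect ratio pointed the same way, the frame’s width and height at any distance both scale with 1/focal length, so the longer lens covers (shorter/longer)² of the wider lens’s frame area. A small sketch under those idealized assumptions; it ignores the distortion correction that ultra-wide cameras commonly apply.

```python
def visible_area_fraction(longer_mm: float, shorter_mm: float) -> float:
    """Fraction of the wider (shorter) lens's frame area that the longer lens still covers.

    Assumes idealized rectilinear lenses with the same aspect ratio, pointed the same way.
    Frame width and height at a given distance both scale with 1 / focal length.
    """
    if not 0 < shorter_mm <= longer_mm:
        raise ValueError("expected 0 < shorter_mm <= longer_mm")
    return (shorter_mm / longer_mm) ** 2


if __name__ == "__main__":
    # iPad Pro 2020 ultra-wide (14mm) vs iPhone 11 ultra-wide (13mm)
    print(f"{visible_area_fraction(14, 13):.1%} of the iPhone 11 ultra-wide frame")
    # Each ultra-wide is exactly half its device's wide lens (28/2 and 26/2),
    # so the ratio between the two wide lenses comes out the same.
    print(f"{visible_area_fraction(28, 26):.1%} of the iPhone 11 wide frame")
```

Under these assumptions, the iPad’s 14mm ultra-wide covers about 86% of the frame area of the iPhone 11’s 13mm one, and the same ratio holds between the two wide lenses.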

What’s most interesting is the mere 10 megapixel resolution. It’s up from previous iPads, which packed an 8 megapixel rear camera, but this is the lowest resolution on a “new” rear camera since the iPhone 6.
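Megapixels count area, so a resolution gap looks smaller along any one edge of the frame. A quick sketch, assuming idealized 4:3 sensors; these are illustrative dimensions, not the iPad’s published pixel counts.

```python
import math
from typing import Tuple


def dimensions_4x3(megapixels: float) -> Tuple[int, int]:
    """Idealized width x height in pixels for a 4:3 sensor with the given megapixel count."""
    pixels = megapixels * 1_000_000
    width = math.sqrt(pixels * 4 / 3)
    height = math.sqrt(pixels * 3 / 4)
    return round(width), round(height)


def linear_ratio(megapixels_a: float, megapixels_b: float) -> float:
    """How much detail camera A resolves along one edge, relative to camera B."""
    return math.sqrt(megapixels_a / megapixels_b)


if __name__ == "__main__":
    for mp in (8, 10, 12):
        w, h = dimensions_4x3(mp)
        print(f"{mp} MP ~ {w} x {h}")
    print(f"10 MP vs 12 MP: {linear_ratio(10, 12):.0%} of the linear resolution")
```

In this idealized model, 10 megapixels gives about 91% of 12 megapixels’ resolution along each edge.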

Of course, megapixels are only one factor in an image, and they don’t always tell the full story. So let’s compare:

In short, the iPhone 11 and 11 Pro pack a significantly larger (and better) sensor in their wide-angle cameras than the iPad does. The ultra-wide sensor on iPhone is comparable in quality to the ultra-wide on iPad, but the iPad’s is lower resolution.

It appears the hardware just isn’t there to support night mode, Deep Fusion, or even portrait mode. The iPhone XR pulled off single-camera Portrait Mode thanks to a larger sensor and by tapping into focus pixels (we even got single-camera Portrait mode working for non-human subjects in Halide!).

Some have asked if it’s possible to add portrait mode with the third little disc on the back of this new iPad, which is the…

The Depth Sensor

Here’s the biggest and totally new thing, then: The LIDAR sensor.

This is the first time a new imaging capture technology has appeared on iPad before iPhone. So we were a bit surprised by a lack of marquee applications to show off its potential. The AR Measure app that Apple bundles makes handy use of it:

How LIDAR Works

The LIDAR sensor, also known as a 3D ‘Time of Flight’ (ToF) sensor, is exceptionally good at detecting range.

Regular camera sensors are good at capturing focused images, in color. The LIDAR sensor doesn’t do anything like this. It emits small points of light, and as they bounce off your surroundings, it times how long it takes the light to come back.

This sounds crazy, but it’s timing something moving at the speed of light. The timing differences it has to tell apart amount to hundreds of picoseconds. Pico? Yes, pico: that’s three orders of magnitude smaller than a nanosecond! A picosecond is 0.000000000001 seconds. Count the zeros.
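The arithmetic behind time of flight is simple: the light travels out and back, so distance = c × t / 2. A minimal sketch (the distances are just examples, and it says nothing about how the sensor’s electronics actually measure time) showing that a round trip at room scale takes nanoseconds, while telling apart surfaces a centimeter or two apart means resolving differences of around 100 picoseconds:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for a light pulse to reach a surface distance_m away and bounce back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def distance_from_time_m(round_trip_s: float) -> float:
    """Invert the time of flight: the light covers the distance twice."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    for d in (0.5, 2.0, 5.0):
        print(f"{d} m away -> {round_trip_time_s(d) * 1e9:.2f} ns round trip")
    # Depth difference corresponding to a 100 picosecond timing difference
    print(f"100 ps -> {distance_from_time_m(100e-12) * 100:.2f} cm of depth")
```

A round trip to a surface 5 m away takes about 33 ns, but every 100 ps of timing error moves the measured depth by roughly 1.5 cm.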

If this sounds familiar to all you rocket scientists out there, the name LIDAR is a play on RADAR, but instead of using RAdio waves, it uses infrared light.

Could this Power Portrait Mode?

Today, the iPad doesn’t support portrait mode on the rear cameras. Could they fix this in a software update in the future, by using this depth data? While I wouldn’t say it’s impossible, it’s very unlikely.

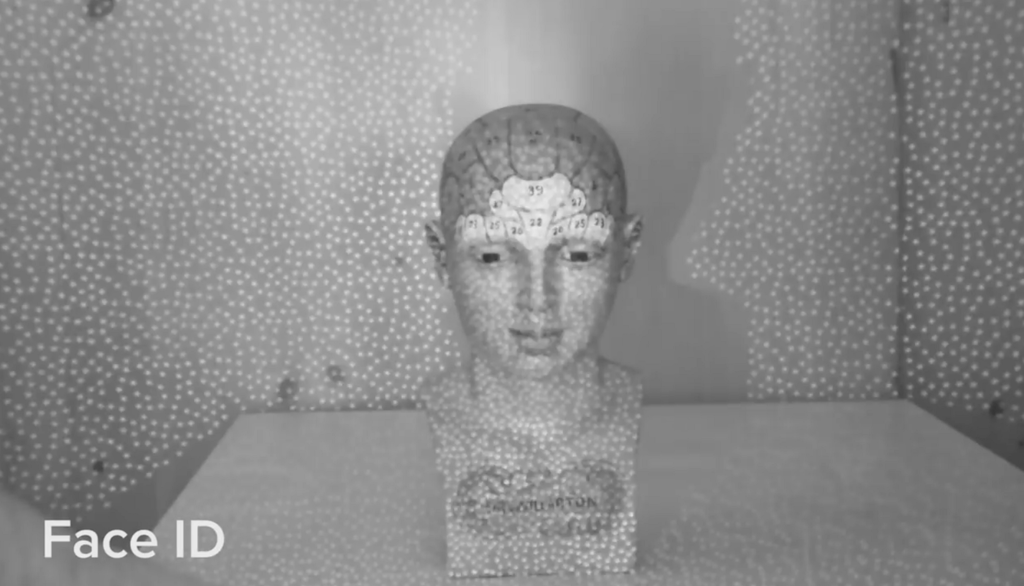

The problem is that the depth data just isn’t high resolution. This fantastic experiment from iFixit shows just how coarse the dot pattern this sensor projects is.

The Face ID sensor only has to resolve an area the size of a human face, but with enough accuracy that you can use it as a security device. The LIDAR sensor is made for room-scale sensing; it’s basically optimized for scanning the rooms in your house.
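Part of the gap is plain geometry: if a projector fans its dots out at a fixed angle, the spacing between dots where they land grows in proportion to distance. A sketch using a hypothetical 1° pitch for both distances, purely to show the scaling (the real pitch of either sensor isn’t given here, and Face ID projects a much denser pattern anyway):

```python
import math


def dot_spacing_m(distance_m: float, angular_pitch_deg: float) -> float:
    """Gap between two neighboring dots near the center of the pattern, on a surface facing the sensor.

    Assumes dots fan out from a single point with a fixed angle between neighbors.
    """
    return 2.0 * distance_m * math.tan(math.radians(angular_pitch_deg) / 2.0)


if __name__ == "__main__":
    PITCH_DEG = 1.0  # hypothetical pitch, for illustration only
    for label, distance in (("face (0.3 m)", 0.3), ("wall across a room (3 m)", 3.0)):
        print(f"{label}: dots {dot_spacing_m(distance, PITCH_DEG) * 1000:.1f} mm apart")
```

Whatever the real pitch, a subject ten times farther away sees its dots spread ten times farther apart.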

The only reason we won’t say it could never support portrait mode is that machine learning is amazing. The depth data on the iPhone XR is very rough, but combined with a neural network, it’s good enough to power portrait mode. But if portrait mode were a priority, we’d put our money on Apple using the dual cameras.

How We Can Use LIDAR

With Halide, we’d love to use the depth data in interesting ways, even if it’s low resolution. There’s only one problem: there are no APIs that give developers access to the underlying depth data. Apple only exposes the processed 3D surface.

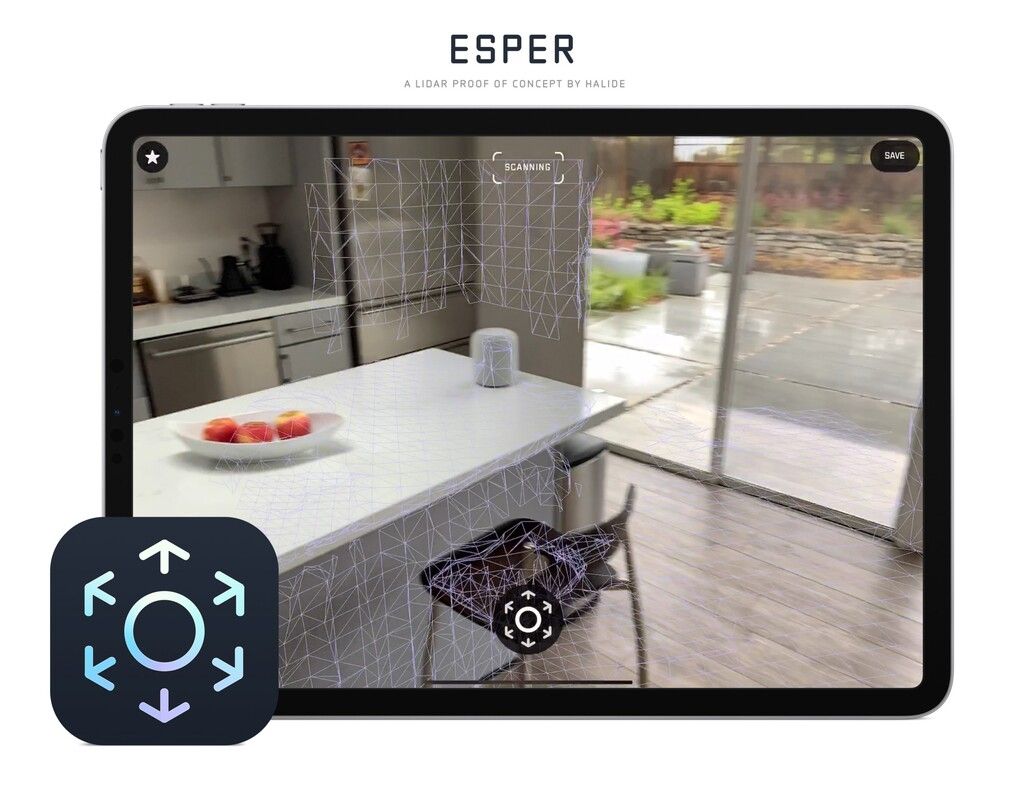

What if we re-thought photographic capture, though? We built a proof-of-concept we’re calling Esper.

Esper experiments with realtime 3D capture using the cameras and LIDAR sensor at room scale. It’s a fun and useful way to capture a space.

In the GIF above, we scan a kitchen. Panning around helps the resolution of the LIDAR sensor immensely: you can see it form, and then refine, a 3D mesh of its surroundings. When you tap the capture button, it textures the 3D model using the camera data.
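Why does panning help so much? A rough intuition: the LIDAR dots are sparse, but every time the iPad moves, they land on slightly different parts of the same surface, and fusing frames fills in the gaps. Here’s a toy sketch of just that idea, assuming a flat 1 m × 1 m wall, a hypothetical square dot pattern 20 cm apart, 5 cm grid cells, and known per-frame shifts. It is not how Esper or ARKit actually build their meshes; real reconstruction also has to track the device’s motion and fit surfaces.

```python
def dot_pattern(width_cm, height_cm, spacing_cm, offset_cm):
    """Hypothetical sparse square dot grid on a flat wall, shifted by an (x, y) offset in cm."""
    ox, oy = offset_cm
    return [
        (x, y)
        for x in range(ox, width_cm, spacing_cm)
        for y in range(oy, height_cm, spacing_cm)
    ]


def fuse_frames(width_cm, height_cm, spacing_cm, cell_cm, offsets):
    """Accumulate dot hits from several frames into a grid; report coverage after each frame."""
    total_cells = (width_cm // cell_cm) * (height_cm // cell_cm)
    hit_cells = set()
    coverage = []
    for offset in offsets:
        for x, y in dot_pattern(width_cm, height_cm, spacing_cm, offset):
            hit_cells.add((x // cell_cm, y // cell_cm))
        coverage.append(len(hit_cells) / total_cells)
    return coverage


if __name__ == "__main__":
    # Each pan shifts the dot pattern a little relative to the wall (offsets are hypothetical).
    pans = [(0, 0), (5, 0), (0, 5), (5, 5), (10, 10), (15, 15)]
    for i, c in enumerate(fuse_frames(100, 100, 20, 5, pans), start=1):
        print(f"after {i} frame(s): {c:.0%} of the wall sampled")
```

With these made-up shifts, each new viewpoint adds another 6.25% of the wall, while repeating an identical frame adds nothing, which is why moving the device matters.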

Once captured, you can view the space in 3D or in AR. You can change the way it looks:

And thanks to it being a three dimensional capture, you can even walk around in it!

The smallest scale at which we found this feasible is about furniture-size. This chair captures pretty well:

If you’re interested in seeing how this works, download one of Ben’s chairs that he ‘captured’ in Esper. (insert ‘you wouldn’t download a car’ joke here)

You can view it on any device and even place it into the ‘real world’ using AR. Thanks to the LIDAR sensor, its scale is accurate.
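Accurate scale means a scan can double as a tape measure. ARKit’s world coordinates are in meters, so once a captured mesh’s vertex positions are exported (the export step and the simple vertex-list format here are assumptions, not how Esper works), a few lines give its real-world size:

```python
from typing import Iterable, Tuple

Vertex = Tuple[float, float, float]


def bounding_box_size_m(vertices: Iterable[Vertex]) -> Tuple[float, float, float]:
    """Width, height and depth of the axis-aligned box around a mesh's vertices.

    Assumes the vertex coordinates are already in meters; raises ValueError if empty.
    """
    xs, ys, zs = zip(*vertices)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


if __name__ == "__main__":
    # Hypothetical vertices standing in for an exported chair scan.
    chair = [(0.0, 0.0, 0.0), (0.48, 0.0, 0.0), (0.48, 0.85, 0.52), (0.0, 0.45, 0.52)]
    w, h, d = bounding_box_size_m(chair)
    print(f"about {w * 100:.0f} x {h * 100:.0f} x {d * 100:.0f} cm")
```

An axis-aligned box depends on how the mesh is oriented, so a tilted scan gives a looser box than the object itself.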

That’s Esper! It’s our quick proof of concept to show that while this new LIDAR sensor can’t (currently) augment our ‘traditional’ photography, it opens the door to new applications that are powerful and creativity-enabling in their own right.

Let us know what you think of Esper on Twitter!

We think this is a super exciting thing about the way camera hardware is evolving. It’s not simply new sensors used to augment photography or video capture as we know it: it will enable entirely new ways to capture reality around us.

It’s up to us as developers to re-imagine the art of capturing the reality around us and give users the tools to explore what’s possible.

Your questions

We asked for some questions, and we got them. Let’s answer some:

With LIDAR, this iPad is easily Apple’s best device for sensing three-dimensional space. When it comes to measurements or AR, there’s no contest. Outside of that, your several-year-old iPhone will still take better photos.

Ah, objects. Unfortunately, the mesh the system outputs right now isn’t accurate enough to send to a 3D printer. If you look at Ben’s chair scan, you’ll see that the surface is rough, and it has trouble with details like chair legs. But it’s a great starting point for a 3D model, since all the proportions will be very accurate.

We’re super excited as photographers and developers to see what the next few years bring. Photography isn’t just taking photos in the traditional sense anymore; it’s combining all the data and smarts in our devices to allow totally new interpretations of what ‘photography’ can mean. And if you’re not excited about that, we’re at a loss!

No optical image stabilization (OIS) was added, and while it’s not exactly missed at the ultra-wide end (the wider the lens, the less OIS is necessary), it’s a bummer that the camera did not improve at all on the wide end. Next year?
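Why does a wider lens need less stabilization? For the same angular wobble, the image shifts across the sensor by an amount proportional to focal length (about f × tan θ), so halving the focal length halves the blur at the same image resolution. A sketch with hypothetical shake and image-width values, ignoring camera translation and rolling-shutter effects:

```python
import math

FULL_FRAME_WIDTH_MM = 36.0


def shake_blur_px(equiv_focal_mm: float, shake_deg: float, image_width_px: int) -> float:
    """Approximate image shift, in pixels, from rotating the camera by shake_deg during an exposure.

    A rotation of theta moves the image by about f * tan(theta) on the sensor; with
    35mm-equivalent numbers, that is a fraction f * tan(theta) / 36 of the frame width.
    """
    shift_fraction = equiv_focal_mm * math.tan(math.radians(shake_deg)) / FULL_FRAME_WIDTH_MM
    return shift_fraction * image_width_px


if __name__ == "__main__":
    SHAKE_DEG = 0.05  # hypothetical hand shake during one exposure
    WIDTH_PX = 4000   # hypothetical image width, same for both cameras
    for name, focal in (("wide (28mm)", 28.0), ("ultra-wide (14mm)", 14.0)):
        print(f"{name}: ~{shake_blur_px(focal, SHAKE_DEG, WIDTH_PX):.1f} px of blur")
```

For the same shake and image width, the 14mm ultra-wide smears the image half as far as the 28mm wide.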

Ah, the hard-hitting questions.

Unless the inside of your mouth is about the scale of a room (like a blue whale), you could conceivably do that a lot better with the infrared, front-facing TrueDepth camera on your iPhone X (or newer). Halide will let you capture the raw depth data. Let us know how it goes!

Wrapping up

Thanks for your questions! We hope you enjoyed our regular virtual ‘teardown’ of this camera module. We’re excited to see cameras get ever more interesting with additional sensors and modules.

Happy snapping!